From New York Press, March 25, 2003

Among the publishing sensations of 1836 was a book by one Maria Monk entitled Awful Disclosures, which purported to be her memoir of life in a Montreal nunnery. Hot stuff by early 19th-century standards, Monk’s book claimed that all nuns were forced to have sex with priests and that the “fruit of priestly lusts” were baptized, murdered, and carried away for secret burial in purple velvet sacks. Nuns who tried to leave the convent were whipped, beaten, gagged, imprisoned, or secretly murdered. Maria claimed to have escaped with her unborn child.

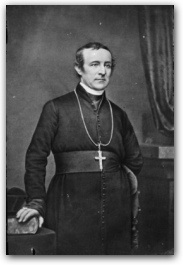

In fact, Maria had never been a nun. She was a runaway from a Catholic home for delinquent girls, and her child’s father was no priest, but merely the boyfriend who had helped her escape. Nevertheless, Awful Disclosures became an overnight bestseller, echoing as it did the most popular anti-Catholic slanders of the day and reflecting the savage hatred of the Irish that went hand in hand with them. It was this cultural climate that helped make John Joseph Hughes, fourth bishop and first archbishop of New York, what one reporter called “the best known, if not exactly the best loved, Catholic bishop in the country.”

John Hughes was an Irishman, an immigrant and a poor farmer’s son. Though intelligent and literate, he had little formal education before he entered the seminary. He was complicated: warm, impulsively charitable, vain (he wore a wig) and combative (he once admitted to “a certain pungency of style” in argument). No man accused him of sainthood; many found him touched with greatness. He built St. Patrick’s Cathedral and founded America’s system of parochial education; he once threatened to burn New York to the ground. Like all archbishops and bishops, Hughes placed a cross in his signature. Some felt it more resembled a knife than the symbol of the redemption of the world, and so the gutter press nicknamed him “Dagger John.” He probably loved it.

Born on June 24, 1797, in Annaloghan, County Tyrone, Hughes later observed that he’d lived the first five days of his life on terms of “social and civil equality with the most favored subjects of the British Empire.” Then he was baptized a Catholic. British law forbade Catholics to own a house worth more than five pounds, hold the King’s commission in the military or receive a Catholic education. It also forbade Roman Catholic priests to preside at Catholic burials, so that, as William J. Stern noted in a 1997 article in City Journal, when Hughes’s younger sister Mary died in 1812, “the best [the priest] could do was scoop up a handful of dirt, bless it, and hand it to Hughes to sprinkle on the grave.”

In 1817, Hughes emigrated to America. He was hired as a gardener and stonemason by the Reverend John Dubois, rector of St. Mary’s College and Seminary in Emmitsburg, Maryland. Believing himself called to the priesthood, Hughes asked to be admitted to the seminary. Father Dubois rejected him as lacking a proper education.

Hughes had met Mother Elizabeth Ann Bayley Seton, a convert to Catholicism who had become a nun after her husband’s death and occasionally visited St. Mary’s. She saw something in the Irishman that Dubois had not and asked the rector to reconsider. So Hughes began his studies in September 1820, graduating and receiving ordination to the priesthood in 1826. He was first assigned to the diocese of Philadelphia.

Anti-Catholic propaganda was everywhere in the City of Brotherly Love. Hughes’s temperament favored the raised fist more than the turned cheek. So when, in 1829, a Protestant newspaper attacked “traitorous popery,” Hughes denounced its editorial board of Protestant ministers as “clerical scum.” And after scores of Protestant ministers fled the 1834 cholera epidemic, which Nativists blamed on the Irish, Hughes ridiculed them as “remarkable for their pastoral solicitude, as long as the flock is healthy….”

In 1835, Hughes won national fame when he debated John Breckinridge, a prominent Protestant clergyman from New York. Breckinridge conjured up the Inquisition, proclaiming that Americans wanted no such popery, no loss of individual liberty. Hughes described the Protestant tyranny over Catholic Ireland and the scene at his sister’s grave. He said he was “an American by choice, not by chance…born under the scourge of Protestant persecution” and that he knew “the value of that civil and religious liberty, which our…government secures for all.” The debate received enormous publicity, making Hughes a hero among many American Catholics. It was noted in Rome.

Dubois, who had left St. Mary’s to become bishop of New York, suffered a series of blows to his health. Although Hughes was barely forty, in January 1838 he was appointed coadjutor bishop, assuring him the succession to Dubois, and was consecrated in the old St. Patrick’s Cathedral on Mott Street. To the older man, it was a terrible humiliation to see a man he had once deemed unqualified for the priesthood succeed him. When Dubois died in 1842, he was buried at his own request beneath the doorstep of Old St. Patrick’s Cathedral so that the Catholics of New York might step on him in death as they had in life.

Hughes’s first order of business was to gain control of his own diocese. Under state law, most Catholic churches and colleges were owned and governed by boards of trustees: laymen who had bought the property and built the church, elected by a handful of wealthy pew holders (parishioners who couldn’t afford pew rents couldn’t vote). When, in 1839, the trustees of Old St. Patrick’s Cathedral had the police remove from the premises a new Sunday school teacher whom Dubois had appointed, Hughes called a mass meeting of the parish. He likened the trustees to the British oppressors of the Irish, thundering that the “sainted spirits” of their forebears would “disavow and disown them, if…they allowed pygmies among themselves to filch away rights of the Church which their glorious ancestors would not yield but with their lives to the persecuting giant of the British Empire.” He later said that by the time he had finished speaking, many in the audience were weeping like children. He added, “I was not far from it myself.”

The public schools were then operated by the Public School Society, a publicly funded but privately managed committee. It favored “non-denominational” moral instruction, reflecting a serene assumption that Protestantism was a fundamental moral code and the basis of the common culture. In fact, as Hughes biographer Father Richard Shaw pointed out, “the entire slant of the teaching was very much anti-Irish and very much anti-Catholic.” The curriculum referred to deceitful Catholics, murderous inquisitions, vile popery, Church corruption, conniving Jesuits and the pope as the Antichrist of Revelation.

Bishop Dubois had advised Catholic parents to keep their children out of the public schools to protect their immortal souls. But Hughes understood the need for formal education among the poor. He demanded that the Public School Society allocate funds for Catholic schools: “We hold…the same idea of our rights that you hold of yours. We wish not to diminish yours, but only to secure and enjoy our own.” He concluded by warning that should the rights of Catholics be infringed, “the experiment may be repeated to-morrow on some other.”

On October 29, 1840, a public hearing was held at City Hall, with numerous lawyers and clergymen representing the Protestant establishment and Hughes the Catholics. Hughes opened with a three-and-a-half-hour spellbinder. The Protestants spent the next day and a half insulting Hughes as an ignorant ploughboy and demonizing Catholics “as irreligious idol worshippers, bent on the murder of all Protestants and the subjugation of all democracies,” according to historian Ray Allen Billington. The City Council denied his request.

With elections less than a month away, Hughes created his own party, Carroll Hall, named for the only Catholic signer of the Declaration of Independence. He ran a slate of candidates to split the Democratic vote, thereby punishing the Democrats for opposing him. The Democrats lost by 290 votes. Carroll Hall had polled 2,200.

In April 1842 the Legislature replaced the Public School Society with elected school boards and forbade sectarian religious instruction. When the Whigs and Nativists had the King James version declared a non-sectarian book, Hughes set about establishing what has become the nation’s major alternative to public education, a privately funded Catholic school system. He would create more than 100 grammar and high schools and help found Fordham University and Manhattan, Manhattanville and Mount St. Vincent Colleges.

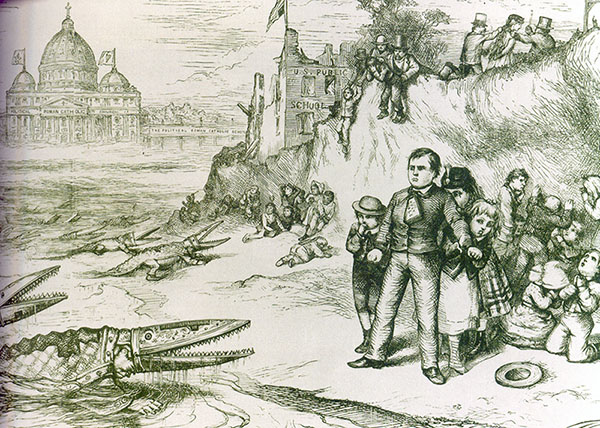

Anti-Catholicism had gained legitimacy by the 1840s. Now the Nativist movement included not only Protestant fundamentalists who saw Catholicism as Satan’s handiwork, but also intellectuals—like Mayor James Harper, of the Harper publishing house—who considered Catholicism incompatible with democracy. All hated the Irish. Harper’s described the “Celtic physiognomy” as “simian-like, with protruding teeth and short upturned noses.” Their cartoonist, Thomas Nast, caricatured the Irish accordingly.

Between May and July of 1844, Nativist mobs in Philadelphia, summoned to “defend themselves against the bloody hand [of the Pope],” ransacked and leveled at least three churches, a seminary and nearly the entire Catholic residential neighborhood of Kensington. When Hughes learned that a similar pogrom, beginning with an assault upon Old St. Patrick’s Cathedral, was planned in New York, he called upon “the Catholic manhood of New York” to rise to the defense of their churches and armed them. A mob that stoned the stained glass windows of the cathedral found the building full of riflemen, and the violence went no further. Hughes later wrote that there had not been “a [Catholic] church in the city…not protected with an average force of one to two thousand men-cool, collected, armed to the teeth….”

Invoking the conflagration that kept Napoleon from using Moscow as his army’s winter quarters, Hughes warned Mayor Harper that Catholics would retaliate if a single church were attacked: “should one Catholic come to harm, or should one Catholic business be molested, we shall turn this city into a second Moscow.” New York’s buildings were largely wooden, and the city had burned twice in the previous century. There were no riots.

On July 19, 1850, Pope Pius IX created the archdiocese of New York, a development reflecting the growth of both the city’s Catholic population and the influence of Hughes himself. Having received the white woolen band of an archbishop from the hands of the Supreme Pontiff, Hughes embarked on a new project, “…a cathedral…worthy of our increasing numbers, intelligence and wealth as a religious community.” On August 15, 1858, before a crowd of 100,000, he laid the cornerstone of the new St. Patrick’s Cathedral at 5th Avenue and 51st Street. He would not see it finished. On January 3, 1864, death came for the archbishop.

After Maria Monk gave birth to a second illegitimate child, her Protestant champions quietly abandoned her. She became a prostitute, was arrested for pickpocketing and died in prison. Her book is still in print.

Even when whales did fight back, moreover, they did so in a predictable, time-honored fashion—with their jaws and tails. But this whale had rammed the ship with its head—not once but twice—and had gnashed its teeth “as if distracted with rage and fury,” as first mate Owen Chase wrote in his account of the ordeal. Chase thought the whale’s behavior a result of cool reasoning, that it had attacked the ship in the manner “calculated to do us the most injury,” knowing that the combined speeds of two objects would be greatest in a head-on collision and the impact therefore most destructive. The image of the enraged, vengeful whale is the cornerstone of Moby Dick, of course. It’s what lies behind Ahab’s quest and his obsession, a point so obvious that it only needs to be made in passing. (“To be enraged with a dumb thing, Captain Ahab, seems blasphemous,” says Starbuck, but mostly so that Ahab can answer, “I’d strike the sun if it insulted me.”) Ahab regards Moby Dick much as the crew of the Essex seem to have viewed the whale that attacked them, as a creature capable of rational action. One of the fascinating questions Philbrick raises, though, if only by implication, is where so weirdly modern a notion could possibly have come from. The Nantucketers who had harvested whales for generations, he points out, saw their vocation as part of the Divine Plan. But to ascribe rage to an object of one’s own aggression is to come perilously close to admitting a sense of guilt. You cannot, after all, discern anger or moral outrage in a fellow being unless you also grant it a point of view.

The Perfect Storm had all of those elements, but it was imbued with a sort of “Wreck-of-the-Hesperus” mentality: the kind of thinking according to which, say, if the young lady lashed to the mast in the Longfellow poem had not been possessed of “a bosom white as the hawthorn buds that ope in the month of May,” the whole incident would have been somehow less worthy of our attention. This was a movie that thought the Gloucester men lost at sea in a 1991 hurricane had to be played by movie stars like George Clooney and Mark Wahlberg in order for us to take an interest in them. It assumed that in order for their story to be tragic or poignant it would have to be established that one of them had a girlfriend and a mother who were going to miss him, that another one had a little boy who would be sad without his daddy, that a third, who never seemed to have much luck with women, had (irony of ironies) just met one with whom things might have worked out. I couldn’t understand it. How could you make a sea-disaster story so unutterably boring, particularly one based on a real-life incident? “Truth is boring,” said a friend, and I had no answer. It was only later that I realized what I ought to have said: “Truth is never boring; that’s reality you’re thinking of.”

Is Moby Dick Ahab’s muse or his nemesis? Does the blasphemy consist in making the whale human, or is it the other way around? And God Created Great Whales pokes fun at creative impulses; at the same time, it has great sympathy for its protagonist. It’s as though Eckert were saying, “Yeah, trying to turn Moby Dick into an opera is dumb, maybe. But it’s better than not having the urge to turn Moby Dick into an opera.” The attempt results in some foolish moments; it also produces moments of great beauty that may or may not owe anything to Melville. I’ve always had a soft spot for the John Huston version of Moby Dick. Most of my favorite bits never occur in the book at all: the prophecy, the wonderful scene where another captain begs Ahab to suspend the hunt and help search for his lost son instead, the scene where Queequeg comes out of his trance because someone is threatening Ishmael. None of that stuff is in the book. I don’t care. I love it. What I love best, though, is something that is in the book, only in a different form. It’s what was lacking in that silly George Clooney movie, so much so that one can understand Billy Tyne’s family wanting to sue the filmmakers. They’re absolutely right. They’re saying the movie version of The Perfect Storm turned Billy Tyne into Ahab, but without giving us any inkling of the forces driving him on. For that you have to go to the John Huston film. You see it on the face of an old sailor with a concertina, playing a few notes of a wistful air, and on the faces of the women standing on the dock as the Pequod pulls away: a sense of tragic inevitability.